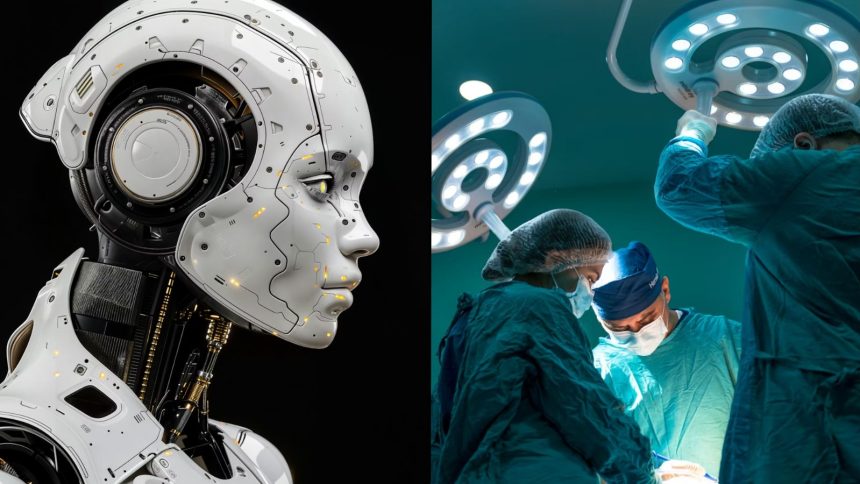

An AI-powered surgical tool has come under fire amid hundreds of complaints alleging that it injured the very patients it was meant to help.

Artificial intelligence has driven advances across many fields, and it is increasingly being used in medicine as well. ChatGPT, for example, has a Health edition designed to help people “better understand” their own bodies and health.

Johnson & Johnson has also built AI into medical tools, starting in 2021 with a tool designed to help ear, nose, and throat specialists treat chronic sinusitis more effectively.

According to Reuters, that tool, the TruDi Navigation System, was blamed in a number of unconfirmed reports of malfunctions during its first few years on the market. These include cases in which the system apparently misled surgeons about where the device was located.

AI tool blamed in botched surgeries

Two lawsuits have since been filed over the TruDi Navigation System by patients who claim that adding AI made the device less safe than it was before.

“The product was arguably safer before integrating changes in the software to incorporate artificial intelligence than after the software modifications were implemented,” alleges one of the suits, brought by a patient who suffered a stroke.

Reuters reported that both plaintiffs allegedly suffered strokes after a major artery was accidentally injured, although the news agency said it was unable to independently verify the claims made in the lawsuits.

Beyond the two lawsuits, both filed in Texas, Reuters noted that other injuries have also been reported, including one in which a surgeon mistakenly punctured the base of a patient’s skull.

Integra LifeSciences, to which Johnson & Johnson sold its Acclarent business and the TruDi Navigation System, said “there is no credible evidence to show any causal connection between the TruDi Navigation System, AI technology, and any alleged injuries.”

AI has caused problems in other fields, too. Lawyers in Kansas, for instance, were fined $12,000 after using AI in their filings, with a judge noting that it “hallucinated legal authority.”